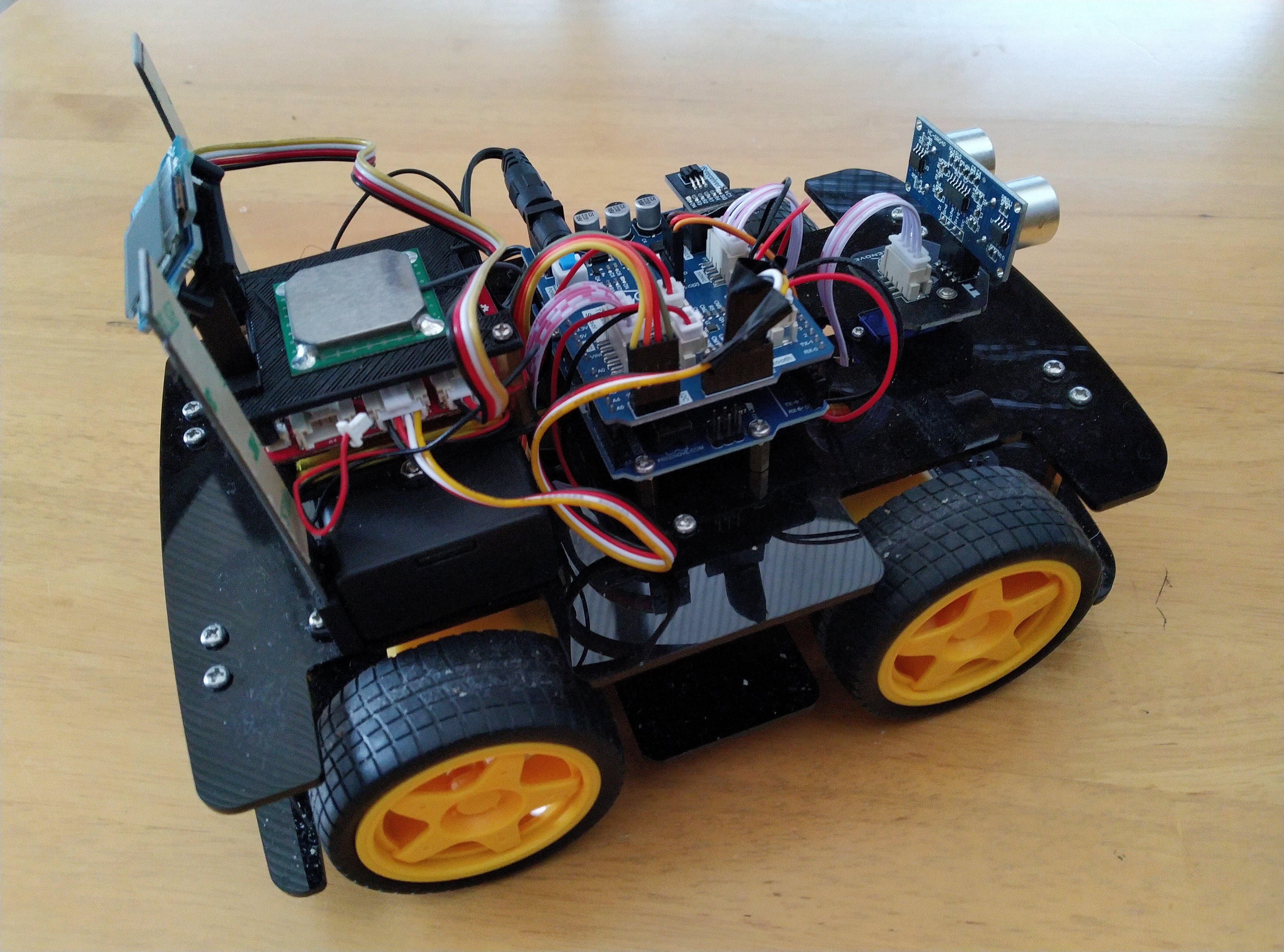

Meet Robot Gordon

I haven’t posted on my lockdown IoT AI at the Edge project for a while, so here’s an update. I decided a “static” 4G GNSS STM32-based device wasn’t enough, so I’ve got it moving on a 4WD autonomous vehicle. Well, semi-autonomous right now, but the plan is to get to a fully autonomous vehicle in increments.

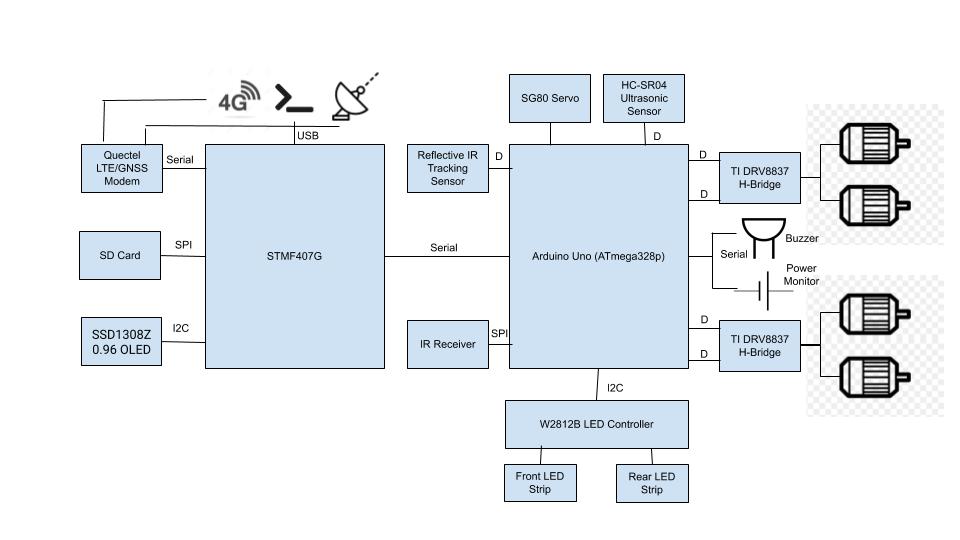

Here’s the (current) Schematic

The Robot is based upon a chassis kit, but with my own Microcontroller architecture and software and added sensors. I’ve 3D printed a number of parts to support mounting the STM32, sensors and antennas.

Currently, the Robot has two Microcontroller subsystems: an ATmega328p running the motor control and the IR and Sonar sensing, and the STM32F407G board with inbuilt Quectel GNSS/4G LTE modem, which I’ve repurposed to act as an Internet gateway and mission controller. The STM32 board also provides GNSS positioning and, in my house at least, the indoor GNSS satellite fix is fairly good.

The STM32 board is pretty powerful for a Microcontroller, running at 168 MHz with 192 KB SRAM and 1 MB Flash. I’ve attached an SD Card reader to the STM32’s SPI bus and an OLED display to the I2C bus. Communication between the ATmega328p and STM32 is via a Serial Bus running at 230400 bps.

Motor control is PWM to all four wheels via two TI DRV8837 H-Bridge drivers. Software is C++ and will be posted to my GitHub account.

So what does it do?

There are currently two modes: manual drive via an IR Remote, and auto drive with sonar-based object detection and avoidance. You can switch in and out of these modes via the IR Remote. All the control and sensor data can be logged to the SD Card and configured to be forwarded over the 4G LTE telemetry link to my Internet server. I’ve also added a UART Console to support debugging and accessing log files. With the 4G modem it also supports SMS, for example texting me when certain events occur - which is amusing.

There are three reflective IR sensors installed on the bottom of the chassis. These will support a Line Tracking / Following mode, but as of the date of this article, I haven’t got round to coding that up yet. Getting 4G LTE and GNSS connectivity working was more interesting!

So how does object detection and avoidance work?

Object detection is via an Ultrasonic sensor, specifically an HC-SR04. These sensors are very cheap, but not known for their repeatability. However, with testing and calibration you can get pretty reasonable accuracy. I’m currently getting +/- 2 mm between 10 and 500 mm. These results are against a hard reflective surface reasonably perpendicular to the sensor. As you’d expect from the physics of ultrasonic waves, the nature of the surface the wave reflects off greatly affects the accuracy of these sensors. They have a field of view (FOV) cone of ~21 Deg horizontal and ~4 Deg vertical, hence the sensor is mounted on a Servo with a +/- 60 Deg sweep to provide better coverage for object detection.
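As a rough illustration of the ranging maths, here is a minimal sketch (not the code running on the Robot). It converts an HC-SR04 echo pulse width into a distance and generates the Servo positions for a +/- 60 Deg sweep. The speed-of-sound constant, the 20 Deg step and the function names `echoToDistanceMm` and `sweepAngles` are assumptions made for the example.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Assumed speed of sound in air at about 20 C, in millimetres per microsecond.
// A calibrated build would likely correct this for temperature.
constexpr double kSpeedOfSoundMmPerUs = 0.343;

// Convert the echo pulse width (round-trip time in microseconds) into a
// one-way distance in millimetres. The pulse covers the path out and back,
// hence the divide by two.
constexpr double echoToDistanceMm(std::uint32_t echo_us) {
    return echo_us * kSpeedOfSoundMmPerUs / 2.0;
}

// Build the list of Servo angles for one sweep, from -half_sweep_deg to
// +half_sweep_deg. Picking a step a little smaller than the ~21 Deg
// horizontal FOV makes neighbouring cones overlap slightly.
std::vector<int> sweepAngles(int half_sweep_deg, int step_deg) {
    assert(half_sweep_deg >= 0 && step_deg > 0);
    std::vector<int> angles;
    for (int a = -half_sweep_deg; a <= half_sweep_deg; a += step_deg) {
        angles.push_back(a);
    }
    return angles;
}
```

With a 20 Deg step, `sweepAngles(60, 20)` yields seven positions from -60 to +60 Deg, and a 1000 µs echo corresponds to roughly 171.5 mm.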

So, does it work? Not bad, I probably need to post a video of it running so you can see for yourselves. Once I “tuned” the combination of Servo sweep angle, cycle time, and object ahead / not ahead algorithm, it copes with most classes of obstacle. The main flaw is that the narrow vertical FOV of the HC-SR04 prevents it from detecting objects that are elevated but still low enough to catch the vehicle. I could potentially solve this by mounting the sensor higher and angling it slightly downwards, but that would mean 3D printing a whole load of new parts and rerouting the wiring!
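The post doesn’t spell out the object ahead / not ahead algorithm, so the following is only one plausible sketch of such a decision, not the tuned version described above. It assumes a sweep produces a list of (angle, distance) readings, that negative angles are to the left, and that the 250 mm stop distance and 20 Deg “ahead” window are placeholder values. All names here are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <vector>

struct SonarReading {
    int angle_deg;       // Servo angle; negative = left (assumed convention)
    double distance_mm;  // measured range at that angle
};

enum class Action { Forward, TurnLeft, TurnRight };

// Decide what to do after one full sweep.
// stop_mm and ahead_window_deg are illustrative tuning values.
Action chooseAction(const std::vector<SonarReading>& sweep,
                    double stop_mm = 250.0,
                    int ahead_window_deg = 20) {
    assert(stop_mm > 0.0);

    // "Object ahead" if any reading inside the forward window is too close.
    const bool blocked = std::any_of(
        sweep.begin(), sweep.end(), [&](const SonarReading& r) {
            return std::abs(r.angle_deg) <= ahead_window_deg &&
                   r.distance_mm < stop_mm;
        });
    if (!blocked) {
        return Action::Forward;
    }

    // Clearance of a side = closest reading on that side.
    // A side with no readings is treated as having zero clearance.
    auto sideClearance = [&](bool left) {
        double best = -1.0;  // -1 marks "no reading seen yet"
        for (const SonarReading& r : sweep) {
            const bool on_side = left ? r.angle_deg < -ahead_window_deg
                                      : r.angle_deg > ahead_window_deg;
            if (!on_side) continue;
            best = (best < 0.0) ? r.distance_mm : std::min(best, r.distance_mm);
        }
        return best < 0.0 ? 0.0 : best;
    };

    // Turn towards the side with more room; ties go right (arbitrary choice).
    return sideClearance(true) > sideClearance(false) ? Action::TurnLeft
                                                      : Action::TurnRight;
}
```

Using the closest reading per side, rather than the average, is a deliberately cautious choice: one near object on a side is enough to make that side less attractive.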

So what’s next for this?

As you might expect with a tinkering project, I’m amassing a long list of functions to add to this. There are a number of key functions missing for any serious autonomy, including localised mapping and navigation, potentially using SLAM, and some form of vision recognition. The motors do not have rotary encoders, so there’s no way to measure velocity. I’ve recently purchased an Optical Flow Sensor, specifically a PMW3901MB, as a means to measure movement and estimate velocity, potentially in conjunction with an IMU.
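To show how an optical flow sensor could stand in for wheel encoders, here is a minimal sketch of the usual small-angle conversion from flow counts to ground velocity. It assumes a downward-facing sensor at a known height and no vehicle rotation during the sample (which is where an IMU would come in). The `rad_per_count` scale factor is a hypothetical calibration constant, not a PMW3901MB datasheet value, and the function name is made up for the example.

```cpp
#include <cassert>

struct Velocity {
    double vx_mm_s;
    double vy_mm_s;
};

// Convert accumulated flow counts over one sample period into velocity.
//   dx_counts, dy_counts : motion counts reported by the sensor
//   height_mm            : sensor height above the ground
//   dt_s                 : sample period in seconds
//   rad_per_count        : ASSUMED calibration constant (radians of apparent
//                          ground motion per count), to be found by experiment
Velocity flowToVelocity(int dx_counts, int dy_counts,
                        double height_mm, double dt_s,
                        double rad_per_count) {
    assert(dt_s > 0.0 && height_mm > 0.0);
    // Small-angle approximation: ground displacement = angle * height.
    const double mm_per_count_per_s = rad_per_count * height_mm / dt_s;
    return {dx_counts * mm_per_count_per_s, dy_counts * mm_per_count_per_s};
}
```

For example, 100 counts in 0.1 s at 30 mm height with an assumed 0.002 rad per count works out to 60 mm/s. In practice the scale factor would be calibrated by driving a known distance.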

But wait, I’m now building another robot!

So, I’ll keep you all posted on how this project progresses. However, I used my Xmas gift cards to purchase the latest Raspberry Pi 4B and I’ve now got a second Robot project on the go - a Hexapod Spider! As with the 4WD Vehicle, I’ve purchased a basic kit, but plan to rearchitect the software and add additional sensor systems. With a Pi 4B with 4GB of RAM, there’s considerably more potential to add more complex features, including OpenCV-based image processing.